Chapter 7.5: The Benchmark of Reality

—— as System Throughput and Holographic Scaling

“The cosmological constant is not a meaningless tiny value; it is the resource quota set by the system administrator for the current session.”

1. Benchmarking the Universe

In the computer industry, when we want to evaluate the performance of a supercomputer, we run benchmark software (such as LINPACK) to measure its FLOPS (Floating Point Operations Per Second).

Since we have established the FS-QCA architecture and confirmed that the universe is a quantum machine processing information, an ultimate question follows: How powerful is this machine? What is its CPU clock speed? How much memory does it have?

We don’t need to launch spacecraft to measure; we can complete this epic benchmark on paper using only two fundamental parameters in physics—the cosmological constant () and Planck’s constant (). This is not just a numbers game; it is a probe into the computational limits of the reality we inhabit.

2. The Core Theorem: The Margolus-Levitin Bound

First, we need to know what the “maximum computational speed” allowed by physical laws is. In 1998, Norman Margolus and Levitin proved an iron law of quantum information processing: the speed at which a physical system processes information is limited by its energy.

Theorem 7.5 (Energy-Computational Power Relationship)

For a physical system with average energy , the minimum time required to evolve from one quantum state to an orthogonal state (i.e., perform one logic flip or basic operation) is bounded by:

This means the system’s maximum operation rate (Max OPS) is:

FS-QCA Interpretation:

Energy in our architecture corresponds to the system’s FS velocity squared (). This again confirms that energy is throughput — the more energy you invest, the faster the underlying QCA grid refreshes, and the further the state moves in projective Hilbert space.

3. Running the Benchmark: Calculating the Universe’s Total Computational Power

Now, let us substitute the observable universe as a whole into the formula.

Step A: Get Total System Energy

In the current cosmic epoch, dark energy dominates (approximately 70%). The density of dark energy is directly determined by the cosmological constant ().

-

Vacuum Energy Density: .

-

Observable Universe Volume: Radius approximately 46 billion light-years, .

-

Total System Energy: .

Step B: Calculate Processing Speed

Substitute into the Margolus-Levitin formula:

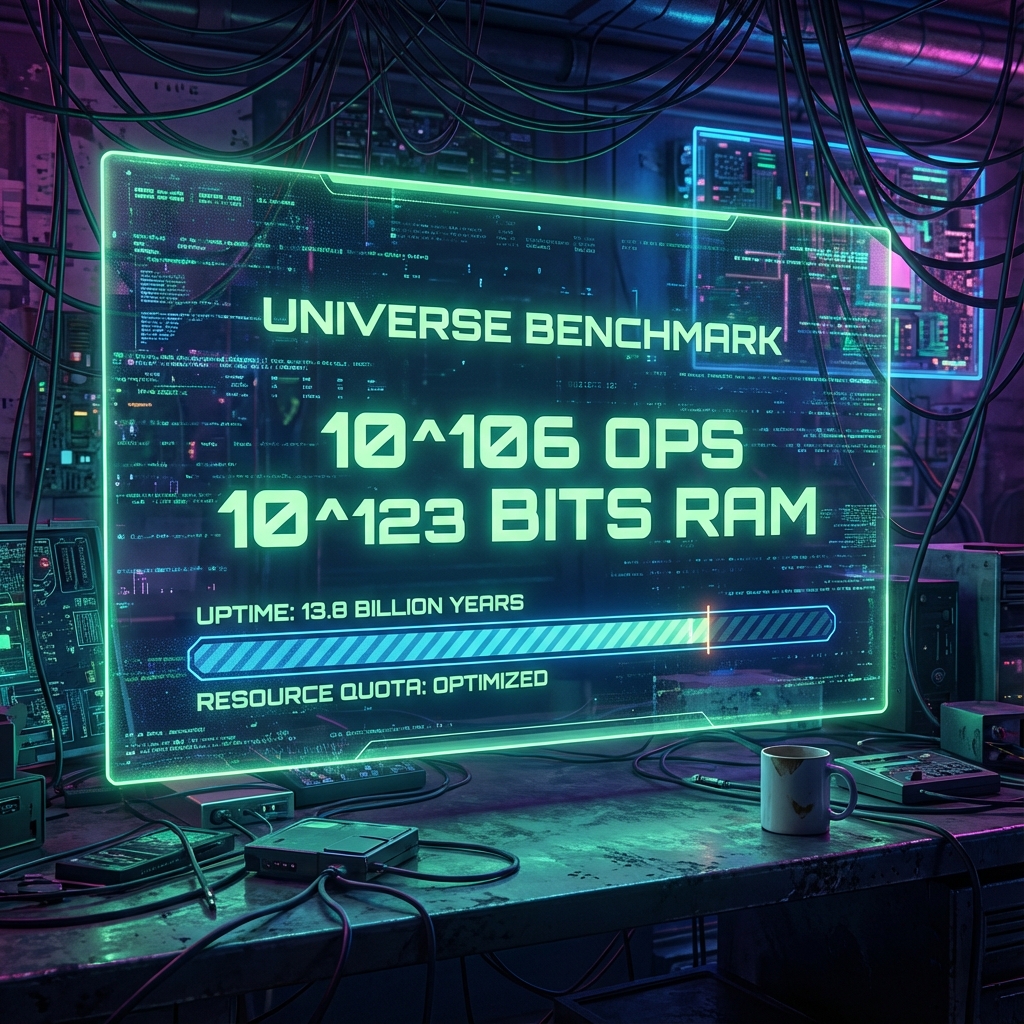

Result: Our universe executes basic logic gate operations per second. This is the total bus throughput of the universe CPU.

Step C: Calculate Total System Memory

According to the holographic principle, the maximum information content (number of bits) the universe can contain is determined by its horizon surface area (Bekenstein bound):

Result: The total memory size of the universe is approximately bits.

4. The Scaling Invariant: The Eternal First Frame

Now, we compare these two key data points:

-

Processing Speed: ops/sec

-

Total Bits: bits

If we ask: “How long does it take for this universe computer to flip all bits in its memory (full-screen refresh)?”

How many years is seconds?

This is exactly the current age of the universe!

This astonishing coincidence reveals a deep holographic scaling law in the FS-QCA architecture.

-

Memory Growth: As time progresses, the horizon surface area increases, memory .

-

Computational Power Growth: The total energy contained within the horizon increases, computational power .

-

Refresh Time: .

Conclusion:

No matter how long the universe has been running, the time required to refresh all memory always exactly equals the current age of the universe.

This means we are not looping the same frame, but are in a state of streaming rendering. Each “blink” (system refresh), the universe’s memory scale expands just enough to require all past time to compute. We are forever at the end of the first frame, riding on the crest of computational expansion.

5. The True Meaning of : Resource Quota

Returning to the vacuum catastrophe problem that troubles physicists: why is so small?

From the perspective of computational complexity, the size of directly determines the trade-off between the system’s scale and resolution.

-

If is large (e.g., Planck scale):

-

Energy density is extremely large extremely fast computation (high OPS).

-

But horizon radius is extremely small extremely small memory (few bits).

-

Result: The universe would be like a tiny, high-frequency oscillating particle, instantly created and destroyed, unable to support complex evolution.

-

-

If is small (e.g., current value):

-

Low energy density gentle computation.

-

Enormous horizon radius massive memory.

-

Result: The system can support a grand, long-cycle simulation, allowing galaxies and life to evolve with sufficient time and space.

-

Architect’s Conclusion:

The cosmological constant is the “resource quota” set by the system administrator. It is a trade-off parameter. It sacrifices local processing intensity in exchange for maximum memory space ( bits) and longest runtime ( s), allowing complex agents to have a chance to emerge in memory.

The Architect’s Note

On: Timeout Settings and The Golden Parameter

Why does the universe look the way it does?

Because is like Max_Session_Time and Max_Memory_Limit on a cloud server configuration sheet.

-

If were slightly larger, the program would exit due to memory overflow before producing results (birth of life).

-

If were slightly smaller, the program would run too slowly to converge within a finite number of steps.

The current is a precisely tuned golden parameter. It ensures that this computer can exactly run operations on bits, just enough to produce you—an observer capable of understanding these numbers.

This again validates Chapter 9.3 (Computational Consistency): since you can read this passage here, it means the system’s parameter configuration is correct, and the system has not timed out. You are running.