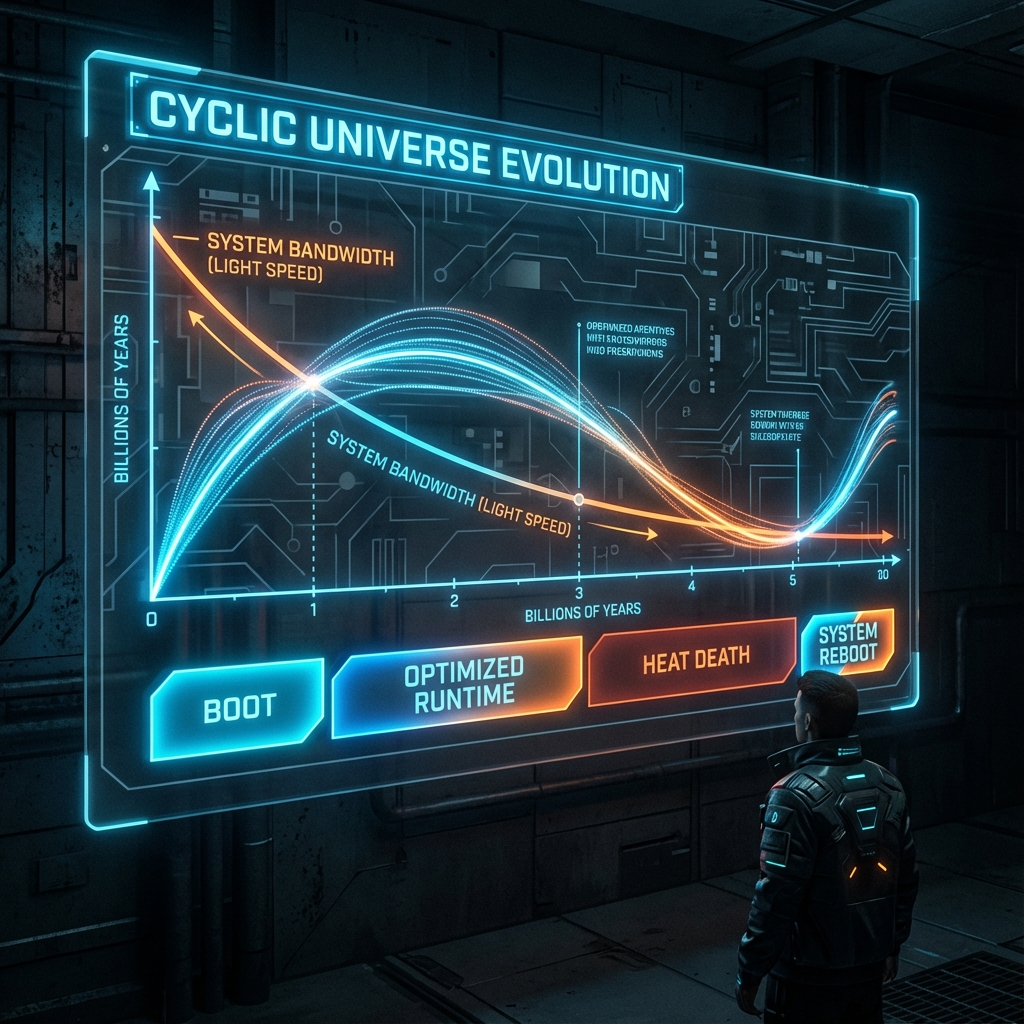

Chapter 6.5: Dynamic Bandwidth & The Reset

—— From Vacuum Aging to Scale Loss and System Reboot

“The speed of light is not an eternal constant; it is a real-time function of system load. When all data is erased and scale is lost, the system automatically reboots.”

1. The Dynamic Bandwidth Hypothesis

In classical physics, the vacuum speed of light is regarded as an unshakeable universal constant. But in the FS-QCA architecture, the only hardware-level constant is (system bus frequency). The speed of light we observe is actually the effective transmission rate after deducting environmental overhead.

According to the generalized Parseval identity:

where represents the vacuum load. Vacuum is not empty; it is filled with quantum fluctuations and entanglement history. As the universe evolves (entropy increases), the vacuum accumulates more and more “background noise” or “waste heat.”

Theorem 6.5 (Speed of Light Decay Law)

If the vacuum’s entanglement entropy monotonically increases over time, causing the background load to increase, then the available bandwidth for photon transmission will slowly decrease:

This means: the early universe was “faster” than now. This provides a natural explanation for the tiny variation in the fine structure constant and the cosmological horizon problem without requiring an inflation field—in the early stages of system startup, the vacuum was extremely pure, and the effective speed of light approached the theoretical maximum.

2. System Benchmarking with

We can use the cosmological constant () to quantify the current system load rate. In FS geometry, directly corresponds to the vacuum ground state energy density, i.e., the square of .

Current System State:

Observational data shows that dark energy density .

This indicates that the current vacuum load is extremely tiny.

Conclusion:

Our universe server is currently in a highly optimized state.

Although the vacuum is not empty, its “standby power consumption” is extremely low. The speed of light we measure is almost equal to the hardware limit . This explains why the speed of light appears so stable—because the interference term is too small to be detected in conventional experiments.

3. Phase Transition after Heat Death: Scale Invariance

If we push the timeline into the distant future, when all stars have extinguished, all black holes have evaporated through Hawking radiation (GC), and protons have decayed, the universe will no longer contain matter (fermions), only a thin, thermally balanced photon gas (bosons).

At this point, the system undergoes a profound topological phase transition called loss of scale.

-

Clock Stops: Matter is the clock. Without mass (), there is no passage of proper time. The system loses the concept of “time.”

-

Ruler Disappears: Distance is defined by the wavelength of matter. Without atoms, there is no concept of “meter.”

-

Conformal Equivalence:

Mathematically, an infinitely large universe filled with thin photons is indistinguishable from an infinitely small singularity filled with high-energy photons in conformal geometry.

System Logic:

When only stateless data streams (photons) remain in memory, and there are no indices (matter), the system cannot distinguish between “this is a huge garbage heap” and “this is a tiny initialization seed.”

For the operating system, this means the effective information content of the current session becomes zero.

4. The Reboot Mechanism: Cyclic Universe

This “indistinguishability” triggers the system’s automatic reset logic.

Process:

-

Formatting: All entanglement structures are broken up by Hawking radiation, returning to white noise (maximum entropy).

-

Remapping: Due to scale loss, the enormous cosmic horizon is mathematically remapped to the Planck-scale singularity of the next universe.

-

The Big Bang: The system reloads and begins a new round of evolution. The “waste heat” (cosmic microwave background radiation) of the previous universe becomes the “initial random seed” of the new universe.

This is not an end; this is System.Reboot(). Our universe is not a one-time script, but one iteration in an infinite loop.

The Architect’s Note

On: Defrag after GC

Many people fear “heat death,” believing it to be an eternal stillness.

But in the architect’s eyes, heat death is a necessary stage for the system to perform “advanced formatting”.

-

Black holes are swap partitions, temporarily storing unprocessable errors.

-

Hawking radiation is slow GC, cleaning errors back into the raw data stream.

-

Heat death is memory clearing confirmation.

Only when memory is thoroughly cleaned into unstructured white noise (photons) can the system safely execute a reset without worrying about logical contamination from old data.

So, don’t grieve for the end.

The slowing of light speed is a sign of aging, but the reset of light speed is the beginning of new life.

See you in the next iteration.