Volume II: Emergence of Spacetime

(第二卷:时空的涌现机制)

Chapter 4: Holographic Principle and Spatial Metric

(第四章:全息原理与空间度规)

4.2 Holographic Compression

(信息的全息压缩)

“If we want to construct a universe, the most foolish approach would be to allocate memory for every point in space. Nature is an ultimate minimalist programmer, discovering that the vast majority of data in three-dimensional space is redundant. The true universe is a two-dimensional ‘membrane,’ and the deep space we perceive is merely the decompression and projection of holographic data on this membrane.”

In the previous section, we revealed the profound connection between spatial geometry and quantum entanglement through the area law of entanglement entropy. This discovery raises a more disruptive computational question: since the maximum information capacity of a three-dimensional region depends only on its surface area, this means the Underlying Data Structure of physical reality does not possess three-dimensional attributes.

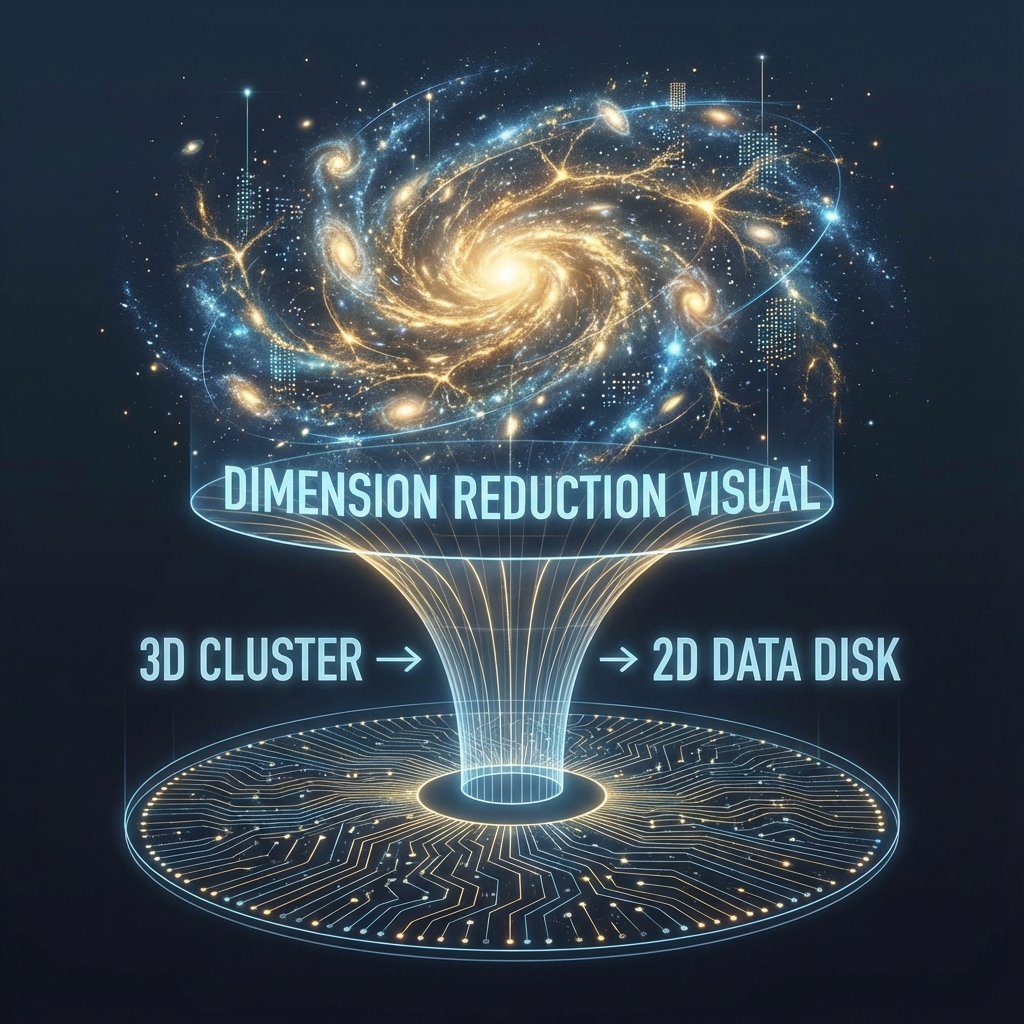

This section will re-articulate the Holographic Principle from the perspectives of information theory and data compression. We will argue that the physical universe adopts a strategy similar to Texture Mapping and Sparse Octree in modern computer graphics, encoding three-dimensional macroscopic experience on two-dimensional boundaries through holographic compression mechanisms, thereby achieving optimal allocation of computational resources.

4.2.1 The Illusion of Volume: From Voxels to Textures

In intuitive physical pictures, we tend to think that space is built from countless tiny Voxels stacked into a solid body. According to this view, a cubic space with side length should contain independent degrees of freedom (i.e., total information ) proportional to its volume:

This is the Volume Law, and also the default assumption of classical field theory and fluid dynamics.

However, in computational science, this storage method is extremely inefficient. If the universe stores a space at Planck resolution ( meters), it would require approximately bits of data. Such a massive amount of data would be a heavy burden even for a universe-level computer.

The holographic principle tells us that nature adopts another encoding scheme. For any causally closed region, its effective degrees of freedom are strictly limited by the boundary surface area :

This means that when we delve into microscopic scales, the so-called “bulk space” does not provide additional information storage bits.

Computational Implication:

Three-dimensional space is not “solid” inside. It is more like a hollow balloon, with all physical information (particle positions, momenta, spins) actually encoded on the balloon’s surface (boundary). Any point inside is not an independent storage unit, but a projection generated from boundary data through some complex Non-local Mapping. The “depth” we perceive is a manifestation of data correlation, not storage stacking.

4.2.2 Bekenstein Bound as Compression Ratio

We can reinterpret Jacob Bekenstein’s entropy bound formula as the Maximum Compression Ratio of the universe’s storage system.

Imagine we pack massive data packets (matter and energy) into a finite spatial region. As matter density increases, gravitational effects begin to manifest, eventually causing the region to collapse into a black hole. At the moment of black hole formation, the information density of that region reaches the physical limit.

Theorem 4.2.1 (Holographic Channel Capacity)

The bit transmission rate of any communication channel or storage medium in the universe cannot exceed 1/4 of its cross-sectional area in Planck units.

This formula is not merely a thermodynamic constraint, but the hardware bus specification of Interactive Computational Cosmology (ICC). It shows:

-

Bits are areal: At Planck scale, a bit’s physical manifestation is not occupying a volume point, but an area patch (Pixel/Plaquette).

-

Horizon from oversaturation: If we attempt to write more than bits of data into a region, the system will trigger a protection mechanism due to Stack Overflow—forming an Event Horizon. The horizon’s role is to “shield” excess information outside the causally connected region, ensuring that the effective information seen by external observers never exceeds the holographic bound.

4.2.3 Encoding Redundancy and Bulk Space

Since real information is only of order , why do we strongly feel a world? This stems from Entanglement Redundancy.

In the mathematical model of AdS/CFT duality (Anti-de Sitter/Conformal Field Theory duality), the quantum field theory (CFT) on the boundary corresponds to the “source code” without gravity, while the internal bulk space (Bulk AdS) corresponds to the “rendered image” with gravity.

Research has found that geometric connectivity in bulk space (such as geodesic distance between two points) is determined by the entanglement patterns of quantum states on the boundary.

-

Short-range entanglement constructs shallow geometry near the boundary.

-

Long-range entanglement constructs deep geometry extending into the interior.

In the ICC model, this means “bulk space” is essentially an Error Correcting Code. To protect fragile quantum information from decoherence, nature diffuses of original data through entanglement networks into of virtual volume. We live in the “logical space” of error-correcting codes, and the physical laws we experience (such as gravity) are actually algorithmic byproducts of the system maintaining the stability of these error-correcting codes.

4.2.4 Black Holes: Extreme Compression States

Black holes are the most extreme case of holographic compression mechanisms and the ultimate laboratory for verifying this theory.

For a classical observer, information falling into a black hole seems to disappear (volume law fails). But for holographic theory, when matter forms a black hole, it actually reaches an Optimal Compression State.

-

Horizon as Hard Drive: All entropy (information) of a black hole is precisely stored on the horizon surface, with each Planck area storing 1/4 nat of information. No bits are lost, and no bits are inside.

-

Firewall and No-Hair Theorem: The black hole’s “no-hair theorem” (only three parameters: mass, charge, angular momentum) reflects the macroscopic manifestation of Lossy Compression of complex matter states; while the “fuzzball picture” in string theory believes that at the microscopic level, all details are encoded on the horizon, which is Lossless Compression.

In the CITM (Interactive Turing Machine) perspective, a black hole is a High-Density Data Node. Due to excessive data density, the system’s rendering engine cannot parse the internal structure (cannot assign independent addresses to internal voxels), so it can only render a black spherical boundary and “tile” all information on this boundary.

4.2.5 Universe as Holographic Projector

In summary, we can construct an engineering picture of cosmic holographic compression:

-

Source Data: Located on the causal boundary of the universe (horizon or boundary at infinity), it is a two-dimensional qubit array.

-

Projection Algorithm: Based on the renormalization flow of tensor networks (MERA or HaPPY Code). It “decompresses” and maps entanglement information on the boundary into bulk space.

-

User Experience: Local observers (us) are inside bulk space. The “solid matter” and “three-dimensional distance” we perceive are Holograms of source data after projection algorithms.

Conclusion:

Space is not empty; it is full of entanglement. Space is not solid; it is merely a projection of information. Holographic compression is the Core Optimization Strategy of the universe operating system to simulate a grand world under limited hardware resources. Since the three-dimensional world is a projection of two-dimensional data, any object’s motion speed in this projection must be limited by the refresh rate of the projection mechanism—this again confirms the essence of the speed of light as system bandwidth.